4 Interactions

The goal of this chapter is to build an application that uses OpenXR actions to create interactivity. We will render numerous colored cubes for the user to play with and build structures from. First, we will update our build files and folders.

In the workspace directory, update the CMakeLists.txt by adding the following CMake code to the end of the file:

add_subdirectory(Chapter4)

Now, create a Chapter4 folder in the workspace directory and into that folder copy the main.cpp and CMakeLists.txt from Chapter3. In the Chapter4/CMakeLists.txt update these lines:

cmake_minimum_required(VERSION 3.22.1)

set(PROJECT_NAME OpenXRTutorialChapter4)

project("${PROJECT_NAME}")

Now build your project. You can run it to check that it still behaves the same as your Chapter 3 code.

4.1 The OpenXR Interaction System

OpenXR interactions are defined in a two-way system, with inputs such as motion-based poses, button presses and analogue controls, and outputs such as haptic controller vibrations. In OpenXR terminology, these are collectively called Actions. An Action is a semantic object, not necessarily associated with a specific hardware control: for example, you would define a “Select” action, not an action for “press the A button”. You would define an action “walk”, not “left analogue stick position”. The specific device input or output that an Action is associated with is the realm of Bindings, which are defined by Interaction Profiles (see below).

Actions are contextual. They are grouped within Action Sets, which are again semantic and app-specific. For example, you might have an Action Set for “gameplay” and a different Action Set for “pause menu”. In more complex applications, you would create Action Sets for different situations: “driving a car” and “walking” could be different Action Sets.

In this chapter, you’ll learn how to create an Action Set containing multiple Actions of different types. You’ll create a binding for your Actions with a simple controller profile, and optionally, with a profile specific to the device or devices you are testing.

4.2 Creating Actions and Action Sets

An OpenXR application has interactions with the user which can be user input to the application or haptic output to the user. In this chapter, we will create some interactions and show how this system works. The interaction system uses three core concepts: Spaces, Actions, and Bindings.

At the end of your OpenXRTutorial class, add this code:

XrActionSet m_actionSet;

// Actions for grabbing blocks, spawning new blocks, and changing the color of a block.

XrAction m_grabCubeAction, m_spawnCubeAction, m_changeColorAction;

// The realtime states of these actions.

XrActionStateFloat m_grabState[2] = {{XR_TYPE_ACTION_STATE_FLOAT}, {XR_TYPE_ACTION_STATE_FLOAT}};

XrActionStateBoolean m_changeColorState[2] = {{XR_TYPE_ACTION_STATE_BOOLEAN}, {XR_TYPE_ACTION_STATE_BOOLEAN}};

XrActionStateBoolean m_spawnCubeState = {XR_TYPE_ACTION_STATE_BOOLEAN};

// The haptic output action for grabbing cubes.

XrAction m_buzzAction;

// The current haptic output value for each controller.

float m_buzz[2] = {0, 0};

// The action for getting the hand or controller position and orientation.

XrAction m_palmPoseAction;

// The XrPaths for the left and right hands or controllers.

XrPath m_handPaths[2] = {0, 0};

// The spaces that represent the two hand poses.

XrSpace m_handPoseSpace[2];

XrActionStatePose m_handPoseState[2] = {{XR_TYPE_ACTION_STATE_POSE}, {XR_TYPE_ACTION_STATE_POSE}};

// The current poses obtained from the XrSpaces.

XrPosef m_handPose[2] = {

{{1.0f, 0.0f, 0.0f, 0.0f}, {0.0f, 0.0f, -m_viewHeightM}},

{{1.0f, 0.0f, 0.0f, 0.0f}, {0.0f, 0.0f, -m_viewHeightM}}};

Here, we have defined an Action Set: a group of related actions that are created together. The individual actions, such as m_grabCubeAction and m_palmPoseAction, will belong to this set. For a pose action we need an XrSpace, so m_handPoseSpace[] has been declared. We also keep a copy of the pose itself for each hand, which will change every frame, and we initialize both hand poses to a predefined pose.

Action Sets are created before the session is initialized, so in Run(), after the call to GetSystemID(), add this line:

CreateActionSet();

After the definition of GetSystemID(), we’ll add these helper functions that convert a string into an XrPath, and vice-versa. Add:

XrPath CreateXrPath(const char *path_string) {

XrPath xrPath;

OPENXR_CHECK(xrStringToPath(m_xrInstance, path_string, &xrPath), "Failed to create XrPath from string.");

return xrPath;

}

std::string FromXrPath(XrPath path) {

uint32_t strl;

char text[XR_MAX_PATH_LENGTH];

XrResult res;

res = xrPathToString(m_xrInstance, path, XR_MAX_PATH_LENGTH, &strl, text);

std::string str;

if (res == XR_SUCCESS) {

str = text;

} else {

OPENXR_CHECK(res, "Failed to retrieve path.");

}

return str;

}
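XrPath values are opaque handles owned by the runtime, so these two helpers are the application's only way to move between strings and paths. As a rough mental model only, the sketch below shows a hypothetical ToyPathTable that interns path strings into 64-bit handles and maps them back. It is not how any particular runtime implements XrPath, and it needs no OpenXR headers; it only illustrates that, within one instance, the same string always yields the same handle and that the handle can be turned back into the string.

```cpp
#include <cstdint>
#include <string>
#include <unordered_map>
#include <vector>

// Toy model of path interning, for illustration only. Real XrPath values are
// opaque handles created by the runtime; this is NOT how a runtime works inside.
using ToyPath = uint64_t;            // stands in for XrPath (a 64-bit value)
constexpr ToyPath kToyNullPath = 0;  // mirrors XR_NULL_PATH, which is 0

class ToyPathTable {
public:
    // Like xrStringToPath in spirit: the same string always yields the same handle.
    ToyPath StringToPath(const std::string &path) {
        auto it = m_ids.find(path);
        if (it != m_ids.end())
            return it->second;
        m_strings.push_back(path);
        ToyPath id = static_cast<ToyPath>(m_strings.size());  // 1-based, so 0 stays "null"
        m_ids.emplace(path, id);
        return id;
    }

    // Like xrPathToString in spirit: recovers the string; empty if the handle is unknown.
    std::string PathToString(ToyPath id) const {
        if (id == kToyNullPath || id > m_strings.size())
            return {};
        return m_strings[id - 1];
    }

private:
    std::unordered_map<std::string, ToyPath> m_ids;  // string -> handle
    std::vector<std::string> m_strings;               // (handle - 1) -> string
};
```

Once a string is interned, comparing or passing paths is just integer work, which is what lets the tutorial create m_handPaths once and reuse them every frame without any string handling.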

Now we will define the CreateActionSet() function. Add the first part of this function after FromXrPath():

void CreateActionSet() {

XrActionSetCreateInfo actionSetCI{XR_TYPE_ACTION_SET_CREATE_INFO};

// The internal name the runtime uses for this Action Set.

strncpy(actionSetCI.actionSetName, "openxr-tutorial-actionset", XR_MAX_ACTION_SET_NAME_SIZE);

// Localized names are required so there is a human-readable action name to show the user if they are rebinding Actions in an options screen.

strncpy(actionSetCI.localizedActionSetName, "OpenXR Tutorial ActionSet", XR_MAX_LOCALIZED_ACTION_SET_NAME_SIZE);

// Set a priority: this comes into play when we have multiple Action Sets, and determines which Action takes priority in binding to a specific input. It must be set before calling xrCreateActionSet(), which reads the create info.

actionSetCI.priority = 0;

OPENXR_CHECK(xrCreateActionSet(m_xrInstance, &actionSetCI, &m_actionSet), "Failed to create ActionSet.");

An Action Set is a group of actions that apply in a specific context. You might have an Action Set for when your XR game is showing a pause menu or control panel and a different Action Set for in-game. There might be different Action Sets for different situations in an XR application: rowing in a boat, climbing a cliff, and so on. So you can create multiple Action Sets, but we only need one for this example. The Action Set is created with a name and a localized string for its description. Now add:

auto CreateAction = [this](XrAction &xrAction, const char *name, XrActionType xrActionType, std::vector<const char *> subaction_paths = {}) -> void {

XrActionCreateInfo actionCI{XR_TYPE_ACTION_CREATE_INFO};

// The type of action: float input, pose, haptic output etc.

actionCI.actionType = xrActionType;

// Subaction paths, e.g. left and right hand. To distinguish the same action performed on different devices.

std::vector<XrPath> subaction_xrpaths;

for (auto p : subaction_paths) {

subaction_xrpaths.push_back(CreateXrPath(p));

}

actionCI.countSubactionPaths = (uint32_t)subaction_xrpaths.size();

actionCI.subactionPaths = subaction_xrpaths.data();

// The internal name the runtime uses for this Action.

strncpy(actionCI.actionName, name, XR_MAX_ACTION_NAME_SIZE);

// Localized names are required so there is a human-readable action name to show the user if they are rebinding the Action in an options screen.

strncpy(actionCI.localizedActionName, name, XR_MAX_LOCALIZED_ACTION_NAME_SIZE);

OPENXR_CHECK(xrCreateAction(m_actionSet, &actionCI, &xrAction), "Failed to create Action.");

};

Here we’ve defined a small local lambda function, CreateAction(), that creates one action. Each action has a name, a localized description, and an action type. It can also, optionally, have a list of subaction paths. A subaction is essentially the same action performed on a different control device, for example the left- or right-hand controller. Now use the lambda to create each of our actions:

// An Action for grabbing cubes.

CreateAction(m_grabCubeAction, "grab-cube", XR_ACTION_TYPE_FLOAT_INPUT, {"/user/hand/left", "/user/hand/right"});

CreateAction(m_spawnCubeAction, "spawn-cube", XR_ACTION_TYPE_BOOLEAN_INPUT);

CreateAction(m_changeColorAction, "change-color", XR_ACTION_TYPE_BOOLEAN_INPUT, {"/user/hand/left", "/user/hand/right"});

// An Action for the position of the palm of the user's hand, appropriate for the location of a grabbing Action.

CreateAction(m_palmPoseAction, "palm-pose", XR_ACTION_TYPE_POSE_INPUT, {"/user/hand/left", "/user/hand/right"});

// An Action for a vibration output on one or other hand.

CreateAction(m_buzzAction, "buzz", XR_ACTION_TYPE_VIBRATION_OUTPUT, {"/user/hand/left", "/user/hand/right"});

// For later convenience we create the XrPaths for the subaction path names.

m_handPaths[0] = CreateXrPath("/user/hand/left");

m_handPaths[1] = CreateXrPath("/user/hand/right");

}

Each Action and Action Set has both a name, for internal use, and a localized description to show to the end-user. This is because the user may want to re-map actions from the default controls, so there must be a human-readable name to show them.

4.3 Interaction Profiles and Bindings

4.3.1 Bindings and Profiles

As OpenXR is an API for many different devices, it needs to provide a way for you as a developer to refer to the various buttons, joysticks, inputs and outputs that a device may have, without needing to know in advance which device or devices the user will have.

To do this, OpenXR defines the concept of interaction profiles. An interaction profile is a collection of interactions supported by a given device and runtime, and defined using textual paths.

Each interaction is defined by a path with three components: the Profile Path, the User Path, and the Component Path. The Profile Path has the form "/interaction_profiles/<vendor_name>/<type_name>". The User Path is a string identifying the controller device, e.g. "/user/hand/left", or "/user/gamepad". The Component Path is a string identifying the specific input or output, e.g. "/input/select/click" or "/output/haptic_left_trigger".

For example, the Khronos Simple Controller Profile has the path:

"/interaction_profiles/khr/simple_controller"

It has the user paths "/user/hand/left" and "/user/hand/right".

The component paths are "/input/select/click", "/input/menu/click", "/input/grip/pose", "/input/aim/pose" and "/output/haptic".

Putting the three parts together, we might identify the select button on the left hand controller as:

profile + user + component

"/interaction_profiles/khr/simple_controller" + "/user/hand/left" + "/input/select/click"

"/interaction_profiles/khr/simple_controller/user/hand/left/input/select/click"

We will now show how to use these profiles in practice to suggest bindings between Actions and inputs or outputs.
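A minimal sketch of the composition step, using a hypothetical MakeInteractionPath() helper that is not part of the tutorial or of OpenXR: because every part begins with '/' and none ends with one, plain string concatenation yields the full path.

```cpp
#include <string>

// Hypothetical helper: joins profile, user and component paths into one path.
// Assumes each part starts with '/' and does not end with '/'.
std::string MakeInteractionPath(const std::string &profile, const std::string &user, const std::string &component) {
    return profile + user + component;
}
```

Note that the binding code in this chapter never builds the full string: the profile path goes into XrInteractionProfileSuggestedBinding::interactionProfile, and each binding path contains only the user and component parts.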

4.3.2 Binding Interactions

We will set up bindings for the actions. A binding is a suggested correspondence between an action (which is app-defined), and the input/output on the user’s devices.

An XrPath is a 64-bit number that uniquely identifies any given forward-slash-delimited path string, allowing us to refer to paths without putting cumbersome string-handling in our runtime code. After the call to CreateActionSet() in Run(), add the line:

SuggestBindings();

After the definition of CreateActionSet(), add:

void SuggestBindings() {

auto SuggestBindings = [this](const char *profile_path, std::vector<XrActionSuggestedBinding> bindings) -> bool {

// The application can call xrSuggestInteractionProfileBindings once per interaction profile that it supports.

XrInteractionProfileSuggestedBinding interactionProfileSuggestedBinding{XR_TYPE_INTERACTION_PROFILE_SUGGESTED_BINDING};

interactionProfileSuggestedBinding.interactionProfile = CreateXrPath(profile_path);

interactionProfileSuggestedBinding.suggestedBindings = bindings.data();

interactionProfileSuggestedBinding.countSuggestedBindings = (uint32_t)bindings.size();

if (xrSuggestInteractionProfileBindings(m_xrInstance, &interactionProfileSuggestedBinding) == XrResult::XR_SUCCESS)

return true;

XR_TUT_LOG("Failed to suggest bindings with " << profile_path);

return false;

};

Using the lambda function SuggestBindings, we call into OpenXR with a given list of suggestions. Let’s try this:

bool any_ok = false;

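// The simple controller has only select and menu buttons, so the change-color and grab Actions each use one hand's select button; the pose and haptic Actions have one path per subaction path.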

any_ok |= SuggestBindings("/interaction_profiles/khr/simple_controller", {{m_changeColorAction, CreateXrPath("/user/hand/left/input/select/click")},

{m_grabCubeAction, CreateXrPath("/user/hand/right/input/select/click")},

{m_palmPoseAction, CreateXrPath("/user/hand/left/input/grip/pose")},

{m_palmPoseAction, CreateXrPath("/user/hand/right/input/grip/pose")},

{m_buzzAction, CreateXrPath("/user/hand/left/output/haptic")},

{m_buzzAction, CreateXrPath("/user/hand/right/output/haptic")}});

Here, we propose a match-up between our app-specific actions and XrPaths that refer to specific controls as interpreted by the runtime, and pass it to xrSuggestInteractionProfileBindings. If the user’s device supports the given profile (and "/interaction_profiles/khr/simple_controller" should usually be supported by any OpenXR runtime), the runtime will recognize these paths and can map them to its own controls. If the user’s device does not support a profile, those bindings will be ignored.

The suggested bindings are not guaranteed to be used: that’s up to the runtime. Some runtimes allow users to override the default bindings, and OpenXR expects this.
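Most of the bindings above come in left/right pairs that differ only in the user path. A small hypothetical helper such as BothHands() (not part of the tutorial) could generate both strings from one component path, in the same left-then-right order as m_handPaths[0] and m_handPaths[1]. This is a sketch only; each result would still need to be converted with CreateXrPath(...c_str()) before going into an XrActionSuggestedBinding.

```cpp
#include <array>
#include <string>

// Hypothetical helper: expands one component path (e.g. "/input/grip/pose")
// into the left- and right-hand binding paths, index 0 = left, 1 = right.
std::array<std::string, 2> BothHands(const std::string &component) {
    return {{"/user/hand/left" + component, "/user/hand/right" + component}};
}
```

Keeping the index order identical to m_handPaths avoids accidentally swapping hands when bindings and per-hand state arrays are written in separate places.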

For OpenXR hand controllers, we distinguish between a “grip pose”, representing the orientation of the handle of the device, and an “aim pose”, which is oriented where the device “points”. The relative orientations of these will vary between different controller configurations, so again, it’s important to test with the devices you have. Let the runtime worry about adapting to devices that you haven’t tested.

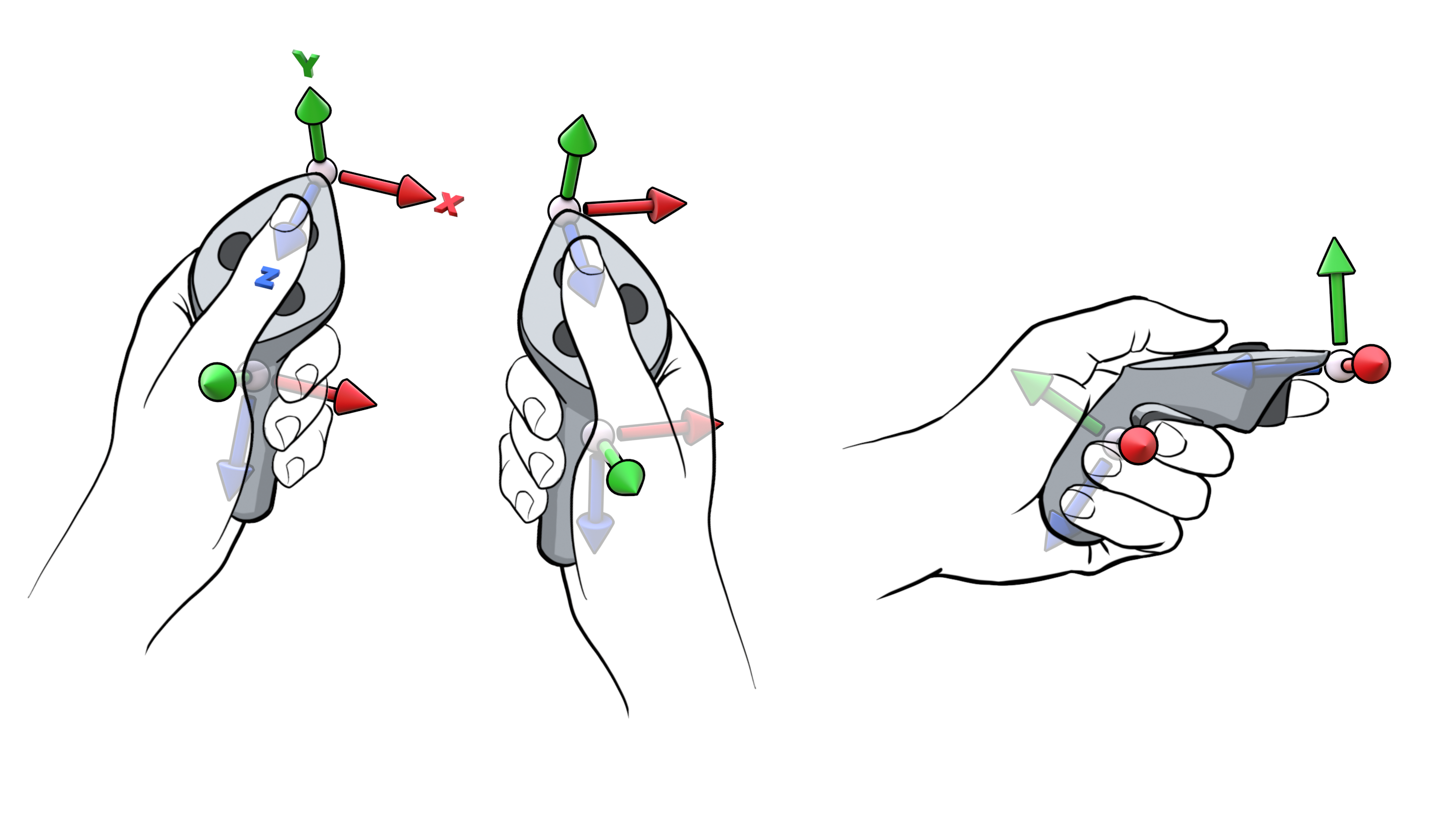

Standard Grip and Aim poses for OpenXR Controllers.

The next part depends on what hardware you will be testing on. It’s optional: the Khronos Simple Controller should work on any OpenXR runtime/device combination. But it has limitations - not least that there are no floating-point controls. If you have an Oculus Quest, whether building natively or running on PC VR via a streaming system, the native profile is called "/interaction_profiles/oculus/touch_controller", and you can insert the following:

// Most Actions here have two paths, one for each subaction path; spawn-cube has no subaction paths and is bound only to the right-hand A button.

any_ok |= SuggestBindings("/interaction_profiles/oculus/touch_controller", {{m_grabCubeAction, CreateXrPath("/user/hand/left/input/squeeze/value")},

{m_grabCubeAction, CreateXrPath("/user/hand/right/input/squeeze/value")},

{m_spawnCubeAction, CreateXrPath("/user/hand/right/input/a/click")},

{m_changeColorAction, CreateXrPath("/user/hand/left/input/trigger/value")},

{m_changeColorAction, CreateXrPath("/user/hand/right/input/trigger/value")},

{m_palmPoseAction, CreateXrPath("/user/hand/left/input/grip/pose")},

{m_palmPoseAction, CreateXrPath("/user/hand/right/input/grip/pose")},

{m_buzzAction, CreateXrPath("/user/hand/left/output/haptic")},

{m_buzzAction, CreateXrPath("/user/hand/right/output/haptic")}});

The main apparent difference is that the grab action is now analogue ("squeeze/value" rather than "select/click"). But you should never assume that the same path means exactly the same behaviour on different profiles. So again, only implement profiles that you can test with their associated hardware, and test every profile that you implement.

We now close out the function, and add RecordCurrentBindings() to report how the runtime has actually bound your actions to your devices.

if (!any_ok) {

DEBUG_BREAK;

}

}

void RecordCurrentBindings() {

if (m_session) {

// Ask the runtime which interaction profile is currently bound to each hand.

XrInteractionProfileState interactionProfile = {XR_TYPE_INTERACTION_PROFILE_STATE, 0, 0};

// Left hand first, then the right hand.

OPENXR_CHECK(xrGetCurrentInteractionProfile(m_session, m_handPaths[0], &interactionProfile), "Failed to get profile.");

if (interactionProfile.interactionProfile) {

XR_TUT_LOG("user/hand/left ActiveProfile " << FromXrPath(interactionProfile.interactionProfile).c_str());

}

OPENXR_CHECK(xrGetCurrentInteractionProfile(m_session, m_handPaths[1], &interactionProfile), "Failed to get profile.");

if (interactionProfile.interactionProfile) {

XR_TUT_LOG("user/hand/right ActiveProfile " << FromXrPath(interactionProfile.interactionProfile).c_str());

}

}

}

In PollEvents(), in the switch case for XR_TYPE_EVENT_DATA_INTERACTION_PROFILE_CHANGED, before the break; directive, insert this:

RecordCurrentBindings();

Now, on startup and if the interaction profile changes for any reason, the active binding will be reported.

Theory and Best Practices for Interaction Profiles

To get the best results from your application on the end-user’s device, it is important to understand the key principles behind the Interaction Profile system. These are laid out in the OpenXR Guide (https://github.com/KhronosGroup/OpenXR-Guide/blob/main/chapters/goals_design_philosophy.md) but in brief:

An application written for OpenXR should work without modification on any device/runtime combination, even those created after the application has been written.

An OpenXR device and runtime should work with any OpenXR application, even those not tested with that device.

The way this is achieved is as follows: Usually, each device will have its own “native” profile, and should also support "khr/simple_controller". As a developer:

You should test the devices and runtimes you have.

You should specify profile bindings for each device you have tested.

You should not implement profiles you have not tested.

It is the runtime’s responsibility to support non-native profiles where possible, either automatically, or with the aid of user-specified rebinding. A device can support any number of interaction profiles, either the nine profiles defined in the OpenXR standard, or an extension profile (see https://registry.khronos.org/OpenXR/specs/1.0/html/xrspec.html#_adding_input_sources_via_extensions).

See also the interaction profile paths section of the OpenXR specification (semantic-path-interaction-profiles).

4.4 Using Actions in the Application

Action Sets and Suggested Bindings are created before the session is initialized. There is a session-specific setup to be done for our actions also. After the call to CreateSession(), add:

CreateActionPoses();

AttachActionSet();

Now after the definition of SuggestBindings(), add:

void CreateActionPoses() {

// Create an XrSpace for a pose action.

auto CreateActionPoseSpace = [this](XrSession session, XrAction xrAction, const char *subaction_path = nullptr) -> XrSpace {

XrSpace xrSpace;

const XrPosef xrPoseIdentity = {{0.0f, 0.0f, 0.0f, 1.0f}, {0.0f, 0.0f, 0.0f}};

// Create frame of reference for a pose action

XrActionSpaceCreateInfo actionSpaceCI{XR_TYPE_ACTION_SPACE_CREATE_INFO};

actionSpaceCI.action = xrAction;

actionSpaceCI.poseInActionSpace = xrPoseIdentity;

if (subaction_path)

actionSpaceCI.subactionPath = CreateXrPath(subaction_path);

OPENXR_CHECK(xrCreateActionSpace(session, &actionSpaceCI, &xrSpace), "Failed to create ActionSpace.");

return xrSpace;

};

m_handPoseSpace[0] = CreateActionPoseSpace(m_session, m_palmPoseAction, "/user/hand/left");

m_handPoseSpace[1] = CreateActionPoseSpace(m_session, m_palmPoseAction, "/user/hand/right");

}

Here, we’re creating the XrSpace that represents the hand pose actions. As with the reference space, we use an identity XrPosef to indicate that we’ll take the pose as-is, without offsets.
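XrQuaternionf stores its components in x, y, z, w order, which is why the identity pose above is written {0.0f, 0.0f, 0.0f, 1.0f}. The sketch below uses a stand-in Quat struct with the same member order (an assumption so it compiles without OpenXR headers) and computes the rotation angle a unit quaternion encodes. It shows that the {1.0f, 0.0f, 0.0f, 0.0f} orientation used to initialize m_handPose is a half-turn about the X axis rather than the identity; it is only a placeholder that is overwritten once xrLocateSpace() returns a valid pose.

```cpp
#include <cmath>

// Stand-in with the same member order as XrQuaternionf: x, y, z, w.
struct Quat {
    float x, y, z, w;
};

// Rotation angle in radians encoded by a unit quaternion: 2 * acos(|w|), in [0, pi].
float RotationAngle(Quat q) {
    float w = std::fabs(q.w);
    if (w > 1.0f)
        w = 1.0f;  // guard against rounding pushing |w| just above 1
    return 2.0f * std::acos(w);
}
```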

Finally, as far as action setup goes, we will attach the Action Set to the session. Add this function:

void AttachActionSet() {

// Attach the action set we just made to the session. We could attach multiple action sets!

XrSessionActionSetsAttachInfo actionSetAttachInfo{XR_TYPE_SESSION_ACTION_SETS_ATTACH_INFO};

actionSetAttachInfo.countActionSets = 1;

actionSetAttachInfo.actionSets = &m_actionSet;

OPENXR_CHECK(xrAttachSessionActionSets(m_session, &actionSetAttachInfo), "Failed to attach ActionSet to Session.");

}

As you can see, it’s possible to attach multiple Action Sets here. But xrAttachSessionActionSets can only be called once per session, so you have to know up front every Action Set you will use in the session. We attach ours right after CreateSession(); xrBeginSession() is called later from PollEvents(), once all setup is complete and the application is ready to proceed.

Now, we must poll the actions, once per-frame. Add these two calls in RenderFrame() just before the call to RenderLayer():

// poll actions here because they require a predicted display time, which we've only just obtained.

PollActions(frameState.predictedDisplayTime);

// Handle the interaction between the user and the 3D blocks.

BlockInteraction();

And add this function after the definition of PollEvents():

void PollActions(XrTime predictedTime) {

// Update our action set with up-to-date input data.

// First, we specify the actionSet we are polling.

XrActiveActionSet activeActionSet{};

activeActionSet.actionSet = m_actionSet;

activeActionSet.subactionPath = XR_NULL_PATH;

// Now we sync the Actions to make sure they have current data.

XrActionsSyncInfo actionsSyncInfo{XR_TYPE_ACTIONS_SYNC_INFO};

actionsSyncInfo.countActiveActionSets = 1;

actionsSyncInfo.activeActionSets = &activeActionSet;

OPENXR_CHECK(xrSyncActions(m_session, &actionsSyncInfo), "Failed to sync Actions.");

Here we enable the Action Set we’re interested in (in our case we have only one), and tell OpenXR to prepare the actions’ per-frame data with xrSyncActions().

XrActionStateGetInfo actionStateGetInfo{XR_TYPE_ACTION_STATE_GET_INFO};

// We query a single pose Action twice: once for each subaction path.

actionStateGetInfo.action = m_palmPoseAction;

// For each hand, get the pose state if possible.

for (int i = 0; i < 2; i++) {

// Specify the subAction Path.

actionStateGetInfo.subactionPath = m_handPaths[i];

OPENXR_CHECK(xrGetActionStatePose(m_session, &actionStateGetInfo, &m_handPoseState[i]), "Failed to get Pose State.");

if (m_handPoseState[i].isActive) {

XrSpaceLocation spaceLocation{XR_TYPE_SPACE_LOCATION};

XrResult res = xrLocateSpace(m_handPoseSpace[i], m_localSpace, predictedTime, &spaceLocation);

if (XR_UNQUALIFIED_SUCCESS(res) &&

(spaceLocation.locationFlags & XR_SPACE_LOCATION_POSITION_VALID_BIT) != 0 &&

(spaceLocation.locationFlags & XR_SPACE_LOCATION_ORIENTATION_VALID_BIT) != 0) {

m_handPose[i] = spaceLocation.pose;

} else {

m_handPoseState[i].isActive = false;

}

}

}

If, and only if, the action is active, we use xrLocateSpace to obtain the current pose of the controller. We request this pose relative to our reference space, m_localSpace, because this is the space we’re using for rendering. If we fail to locate a valid pose for the controller, we set XrActionStatePose::isActive to false. The resulting m_handPose[] values are used by BlockInteraction() and, in the next section, to render each controller’s position.
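The validity test above checks two bits of XrSpaceLocation::locationFlags. The standalone sketch below (an illustration, not code to add to PollActions()) restates that test with local constants mirroring the values of the XR_SPACE_LOCATION_*_BIT constants in openxr.h; in real code, use the header's constants and the XrSpaceLocationFlags type. The second helper, PoseIsInferred(), is a hypothetical extension: XR_SPACE_LOCATION_ORIENTATION_TRACKED_BIT and XR_SPACE_LOCATION_POSITION_TRACKED_BIT distinguish an actively tracked pose from one the runtime has only estimated, a distinction PollActions() does not make.

```cpp
#include <cstdint>

// Local stand-ins mirroring openxr.h; XrSpaceLocationFlags is a 64-bit flag type.
using LocationFlags = uint64_t;
constexpr LocationFlags kOrientationValid = 0x00000001;    // XR_SPACE_LOCATION_ORIENTATION_VALID_BIT
constexpr LocationFlags kPositionValid = 0x00000002;       // XR_SPACE_LOCATION_POSITION_VALID_BIT
constexpr LocationFlags kOrientationTracked = 0x00000004;  // XR_SPACE_LOCATION_ORIENTATION_TRACKED_BIT
constexpr LocationFlags kPositionTracked = 0x00000008;     // XR_SPACE_LOCATION_POSITION_TRACKED_BIT

// True only when both orientation and position are valid, as PollActions() requires.
bool PoseIsUsable(LocationFlags flags) {
    constexpr LocationFlags required = kOrientationValid | kPositionValid;
    return (flags & required) == required;
}

// Hypothetical: usable, but at least one component is not actively tracked.
bool PoseIsInferred(LocationFlags flags) {
    constexpr LocationFlags tracked = kOrientationTracked | kPositionTracked;
    return PoseIsUsable(flags) && (flags & tracked) != tracked;
}
```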

Next, we read the states of the grab, change-color and spawn-cube Actions.

for (int i = 0; i < 2; i++) {

actionStateGetInfo.action = m_grabCubeAction;

actionStateGetInfo.subactionPath = m_handPaths[i];

OPENXR_CHECK(xrGetActionStateFloat(m_session, &actionStateGetInfo, &m_grabState[i]), "Failed to get Float State of Grab Cube action.");

}

for (int i = 0; i < 2; i++) {

actionStateGetInfo.action = m_changeColorAction;

actionStateGetInfo.subactionPath = m_handPaths[i];

OPENXR_CHECK(xrGetActionStateBoolean(m_session, &actionStateGetInfo, &m_changeColorState[i]), "Failed to get Boolean State of Change Color action.");

}

// The Spawn Cube action has no subActionPath:

{

actionStateGetInfo.action = m_spawnCubeAction;

actionStateGetInfo.subactionPath = 0;

OPENXR_CHECK(xrGetActionStateBoolean(m_session, &actionStateGetInfo, &m_spawnCubeState), "Failed to get Boolean State of Spawn Cube action.");

}

Finally in this function, we’ll add the haptic buzz behaviour, which has variable amplitude.

for (int i = 0; i < 2; i++) {

m_buzz[i] *= 0.5f;

if (m_buzz[i] < 0.01f)

m_buzz[i] = 0.0f;

XrHapticVibration vibration{XR_TYPE_HAPTIC_VIBRATION};

vibration.amplitude = m_buzz[i];

vibration.duration = XR_MIN_HAPTIC_DURATION;

vibration.frequency = XR_FREQUENCY_UNSPECIFIED;

XrHapticActionInfo hapticActionInfo{XR_TYPE_HAPTIC_ACTION_INFO};

hapticActionInfo.action = m_buzzAction;

hapticActionInfo.subactionPath = m_handPaths[i];

OPENXR_CHECK(xrApplyHapticFeedback(m_session, &hapticActionInfo, (XrHapticBaseHeader *)&vibration), "Failed to apply haptic feedback.");

}

}
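The haptic amplitude halves every frame and is cut to zero once it drops below 0.01. The sketch below copies that decay into standalone functions (DecayBuzz() and NonZeroBuzzFrames() are hypothetical names, not tutorial code) so you can count how many frames a buzz lasts.

```cpp
// Standalone copy of the per-frame decay applied to m_buzz[i] in PollActions().
float DecayBuzz(float buzz) {
    buzz *= 0.5f;
    if (buzz < 0.01f)
        buzz = 0.0f;
    return buzz;
}

// Counts the frames on which a buzz starting at `initial` sends a non-zero amplitude.
int NonZeroBuzzFrames(float initial) {
    int frames = 0;
    for (float buzz = DecayBuzz(initial); buzz > 0.0f; buzz = DecayBuzz(buzz))
        ++frames;
    return frames;
}
```

Starting from 1.0, the amplitudes 0.5, 0.25, ..., 0.015625 give six non-zero frames; starting from 0.2 gives four. Because BlockInteraction() sets m_buzz after PollActions() has already applied this frame's haptics, the first pulse actually sent after a grab has amplitude 0.5.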

Now we’ve completed polling all the actions in the application. Let’s introduce something to interact with. After your declaration of m_pipeline and before XrActionSet m_actionSet, we will declare some interactable 3D blocks. Add:

// An instance of a 3d colored block.

struct Block {

XrPosef pose;

XrVector3f scale;

XrVector3f color;

};

// The list of block instances.

std::vector<Block> m_blocks;

// Don't let too many blocks get created.

const size_t m_maxBlockCount = 100;

// Which block, if any, is being held by each of the user's hands or controllers.

int m_grabbedBlock[2] = {-1, -1};

// Which block, if any, is nearby to each hand or controller.

int m_nearBlock[2] = {-1, -1};

We will add two more functions after the definition of PollActions to enable some interaction between the user and the 3D blocks:

// Helper function to snap a 3D position to the nearest 10cm

static XrVector3f FixPosition(XrVector3f pos) {

int x = int(std::nearbyint(pos.x * 10.f));

int y = int(std::nearbyint(pos.y * 10.f));

int z = int(std::nearbyint(pos.z * 10.f));

pos.x = float(x) / 10.f;

pos.y = float(y) / 10.f;

pos.z = float(z) / 10.f;

return pos;

}

// Handle the interaction between the user's hands, the grab action, and the 3D blocks.

void BlockInteraction() {

// For each hand:

for (int i = 0; i < 2; i++) {

float nearest = 1.0f;

// If not currently holding a block:

if (m_grabbedBlock[i] == -1) {

m_nearBlock[i] = -1;

// Only if the pose was detected this frame:

if (m_handPoseState[i].isActive) {

// For each block:

for (int j = 0; j < m_blocks.size(); j++) {

auto block = m_blocks[j];

// How far is it from the hand to this block?

XrVector3f diff = block.pose.position - m_handPose[i].position;

float distance = std::max(fabs(diff.x), std::max(fabs(diff.y), fabs(diff.z)));

if (distance < 0.05f && distance < nearest) {

m_nearBlock[i] = j;

nearest = distance;

}

}

}

if (m_nearBlock[i] != -1) {

if (m_grabState[i].isActive && m_grabState[i].currentState > 0.5f) {

m_grabbedBlock[i] = m_nearBlock[i];

m_buzz[i] = 1.0f;

} else if (m_changeColorState[i].isActive == XR_TRUE && m_changeColorState[i].currentState == XR_FALSE && m_changeColorState[i].changedSinceLastSync == XR_TRUE) {

auto &thisBlock = m_blocks[m_nearBlock[i]];

XrVector3f color = {pseudorandom_distribution(pseudo_random_generator), pseudorandom_distribution(pseudo_random_generator), pseudorandom_distribution(pseudo_random_generator)};

thisBlock.color = color;

}

} else {

// not near a block? We can spawn one.

if (m_spawnCubeState.isActive == XR_TRUE && m_spawnCubeState.currentState == XR_FALSE && m_spawnCubeState.changedSinceLastSync == XR_TRUE && m_blocks.size() < m_maxBlockCount) {

XrQuaternionf q = {0.0f, 0.0f, 0.0f, 1.0f};

XrVector3f color = {pseudorandom_distribution(pseudo_random_generator), pseudorandom_distribution(pseudo_random_generator), pseudorandom_distribution(pseudo_random_generator)};

m_blocks.push_back({{q, FixPosition(m_handPose[i].position)}, {0.095f, 0.095f, 0.095f}, color});

}

}

} else {

m_nearBlock[i] = m_grabbedBlock[i];

if (m_handPoseState[i].isActive)

m_blocks[m_grabbedBlock[i]].pose.position = m_handPose[i].position;

if (!m_grabState[i].isActive || m_grabState[i].currentState < 0.5f) {

m_blocks[m_grabbedBlock[i]].pose.position = FixPosition(m_blocks[m_grabbedBlock[i]].pose.position);

m_grabbedBlock[i] = -1;

m_buzz[i] = 0.2f;

}

}

}

}
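To experiment with the two geometric rules in BlockInteraction() outside the application, here is a standalone sketch using a minimal Vec3 stand-in for XrVector3f (an assumption so it compiles without OpenXR headers). SnapToGrid() follows the same logic as FixPosition(), and the hypothetical FindNearestBlock() performs the same nearest-block search. The distance is the largest per-axis difference (the Chebyshev distance), so a hand "reaches" a block when it is inside a cube of half-width 5cm around the block's center, not a sphere.

```cpp
#include <algorithm>
#include <cmath>
#include <cstddef>
#include <vector>

// Minimal stand-in for XrVector3f.
struct Vec3 {
    float x, y, z;
};

// Same logic as FixPosition(): snap each coordinate to the nearest 0.1m.
Vec3 SnapToGrid(Vec3 pos) {
    int x = int(std::nearbyint(pos.x * 10.f));
    int y = int(std::nearbyint(pos.y * 10.f));
    int z = int(std::nearbyint(pos.z * 10.f));
    pos.x = float(x) / 10.f;
    pos.y = float(y) / 10.f;
    pos.z = float(z) / 10.f;
    return pos;
}

// Same search as BlockInteraction(): index of the nearest block whose
// Chebyshev distance to the hand is below maxDistance, or -1 if none is.
int FindNearestBlock(const std::vector<Vec3> &blocks, Vec3 hand, float maxDistance = 0.05f) {
    int nearestIndex = -1;
    float nearest = maxDistance;
    for (std::size_t j = 0; j < blocks.size(); ++j) {
        float dx = std::fabs(blocks[j].x - hand.x);
        float dy = std::fabs(blocks[j].y - hand.y);
        float dz = std::fabs(blocks[j].z - hand.z);
        float distance = std::max(dx, std::max(dy, dz));
        if (distance < nearest) {
            nearest = distance;
            nearestIndex = static_cast<int>(j);
        }
    }
    return nearestIndex;
}
```

Starting nearest at maxDistance is equivalent to the original's nearest = 1.0f combined with the distance < 0.05f test. Also note that std::nearbyint uses the current floating-point rounding mode, which by default rounds halfway cases to even, so a coordinate of 0.25 snaps to 0.2 rather than 0.3.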

4.5 Rendering the Controller Position

We will now draw some geometry to represent the controller poses. We already have a mathematics library from a previous chapter, but we will need a couple more headers for std::min(), std::max() and for generating pseudo-random colors.

Add this after #include <xr_linear_algebra.h>:

// Include <algorithm> for std::min and max

#include <algorithm>

// Random numbers for colorful blocks

#include <random>

static std::uniform_real_distribution<float> pseudorandom_distribution(0, 1.f);

static std::mt19937 pseudo_random_generator;

Inside our CreateResources() method, locate where we set the variable numberOfCuboids and update it as follows:

size_t numberOfCuboids = 64 + 2 + 2;

We will render 64 interactable cubes, two cuboids representing the controllers and a further two for the floor and table from the previous chapter. Inside our CreateResources() method, after the call to m_graphicsAPI->CreatePipeline(), add the following code, which sets up the orientation, position and color of each interactable cube.

// Create sixty-four cubic blocks, spaced 20cm apart, evenly distributed,

// and randomly colored.

float scale = 0.2f;

// Center the blocks a little way from the origin.

XrVector3f center = {0.0f, -0.2f, -0.7f};

for (int i = 0; i < 4; i++) {

float x = scale * (float(i) - 1.5f) + center.x;

for (int j = 0; j < 4; j++) {

float y = scale * (float(j) - 1.5f) + center.y;

for (int k = 0; k < 4; k++) {

float angleRad = 0;

float z = scale * (float(k) - 1.5f) + center.z;

// No rotation

XrQuaternionf q = {0.0f, 0.0f, 0.0f, 1.0f};

// A random color.

XrVector3f color = {pseudorandom_distribution(pseudo_random_generator), pseudorandom_distribution(pseudo_random_generator), pseudorandom_distribution(pseudo_random_generator)};

m_blocks.push_back({{q, {x, y, z}}, {0.095f, 0.095f, 0.095f}, color});

}

}

}
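The loop above places each block at scale * (index - 1.5) + center on each axis. As a sketch, the hypothetical GridPositions() below generalizes it to an n by n by n grid (it reproduces the loop when n is 4) using a minimal Vec3 stand-in for XrVector3f, which makes it easy to check the resulting extents.

```cpp
#include <cstddef>
#include <vector>

// Minimal stand-in for XrVector3f.
struct Vec3 {
    float x, y, z;
};

// n*n*n positions with the given spacing, centered on `center`.
// For n == 4 the per-axis offsets are -1.5, -0.5, 0.5 and 1.5 spacings.
std::vector<Vec3> GridPositions(int n, float spacing, Vec3 center) {
    std::vector<Vec3> positions;
    positions.reserve(std::size_t(n) * n * n);
    float half = 0.5f * float(n - 1);
    for (int i = 0; i < n; i++)
        for (int j = 0; j < n; j++)
            for (int k = 0; k < n; k++)
                positions.push_back({spacing * (float(i) - half) + center.x,
                                     spacing * (float(j) - half) + center.y,
                                     spacing * (float(k) - half) + center.z});
    return positions;
}
```

With n = 4, a spacing of 0.2 and the center used above, x runs from -0.3 to 0.3, y from -0.5 to 0.1 and z from -1.0 to -0.4 (meters), giving the 64 positions accounted for in numberOfCuboids.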

Recall that we’ve already inserted a call to PollActions() in the function RenderFrame(), so we’re ready to render the controller positions. In the RenderLayer() method, after we render the floor and table (i.e. just before m_graphicsAPI->EndRendering()), add the following code to render the controller positions and the interactable cubes:

// Draw some blocks at the controller positions:

for (int j = 0; j < 2; j++) {

if (m_handPoseState[j].isActive) {

RenderCuboid(m_handPose[j], {0.02f, 0.04f, 0.10f}, {1.f, 1.f, 1.f});

}

}

for (int j = 0; j < m_blocks.size(); j++) {

auto &thisBlock = m_blocks[j];

XrVector3f sc = thisBlock.scale;

if (j == m_nearBlock[0] || j == m_nearBlock[1])

sc = thisBlock.scale * 1.05f;

RenderCuboid(thisBlock.pose, sc, thisBlock.color);

}

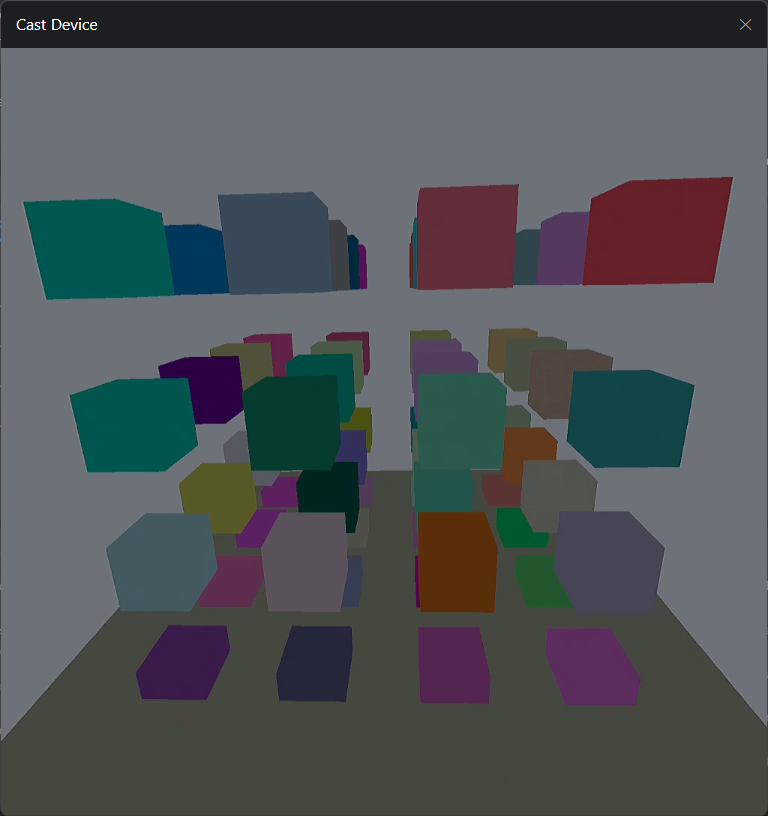

Now build and run your application. You should see something like this:

You should also be able to grab and move the cubes with the controllers.

4.6 Checking for Connected Controllers

Look again at the function PollActions(). We specify which action to query with the XrActionStateGetInfo struct, then use a type-specific call. For our boolean Change Color Action, we call xrGetActionStateBoolean to retrieve an XrActionStateBoolean struct. Its currentState member tells us whether the boolean is true or false, which we can use to determine whether the user is pressing the bound button on the controller.

However, the struct XrActionStateBoolean also has a member called isActive, which is true only if at least one input source is currently contributing to the action’s state. If it’s false, ignore currentState: for example, the controller may be disconnected, or the action may have no active binding.

Similarly, XrActionStateFloat has a floating-point currentState value, which is valid if isActive is true. The struct has changedSinceLastSync, which is true if the value changed between the previous and current calls to xrSyncActions. And it has lastChangeTime, which is the time at which the value last changed. This allows us to be very precise about when the user pressed the button, and how long they held it down for. This could be used to detect “long presses”, or double-clicks.
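XrActionStateBoolean carries the same changedSinceLastSync and lastChangeTime members. The sketch below uses a stand-in struct with those members (an assumption so it compiles without OpenXR headers; XrTime is a signed 64-bit count of nanoseconds) to show edge detection and a hypothetical long-press test. ReleasedThisFrame() is the same condition BlockInteraction() uses to spawn blocks and change their color. For IsLongPress(), a plausible choice for now is the predicted display time passed to PollActions(); the threshold is up to you.

```cpp
#include <cstdint>

// Stand-in for the XrActionStateBoolean members used here.
using Time = int64_t;  // like XrTime: nanoseconds
struct BoolActionState {
    bool isActive;
    bool currentState;
    bool changedSinceLastSync;
    Time lastChangeTime;
};

// Became true since the previous xrSyncActions().
bool PressedThisFrame(const BoolActionState &s) {
    return s.isActive && s.currentState && s.changedSinceLastSync;
}

// Became false since the previous xrSyncActions(), as BlockInteraction() checks.
bool ReleasedThisFrame(const BoolActionState &s) {
    return s.isActive && !s.currentState && s.changedSinceLastSync;
}

// Hypothetical long press: held continuously for at least `threshold` nanoseconds.
bool IsLongPress(const BoolActionState &s, Time now, Time threshold) {
    return s.isActive && s.currentState && (now - s.lastChangeTime) >= threshold;
}
```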

Careful use of this polling metadata will help you to create applications that are responsive and intuitive to use. Bear in mind as well that multiple physical controls could be bound to the same action, and the user could be using more than one controller at once. See the OpenXR spec. for more details.

4.7 Summary

In this chapter, you have learned about Actions, Interaction Profiles and Bindings. You’ve learned the principles and best practices of the action system: how to specify bindings, and when to use different binding profiles. And you’ve created interactions for your app that demonstrate these principles in practice.

Below is a download link to a zip archive for this chapter containing all the C++ and CMake code for all platform and graphics APIs.

Version: v0.0.0